The Anatomy of a Data-Driven VC Sourcing Stack

(generated via WordCloud)

What is sourcing?

To understand my motivation for building an automated, data-driven investment sourcing system, I want to first explain what sourcing is in the context of venture capital.

Sourcing is the process in which investors look for high-potential startups that fit within a firm’s specific investment strike zone (stage, geography, sector, etc.). Having high-quality deal flow directly affects every subsequent stage of the investment process—from due diligence to actually winning the deal. Ultimately, a venture capital firm’s performance depends on its selection of investments that come directly from the top of funnel. This article is the first part of a mini-series outlining my journey of building an automated sourcing system.

Crafting an investment hypothesis for open-source startups

A good chunk of the startups I meet fall within the broad sphere of Cloud Infrastructure. Within the early-stage Cloud Infrastructure landscape, many companies in recent years have adopted an open-source, product-led growth go-to-market (GTM) motion. Among other benefits, this allows customers to have an easy “on-ramp” to try new software and have the confidence that they can still access the source code to a product if the startup shuts down. These startups therefore have at least some subset of their product open-sourced on GitHub—the perfect goldmine for VCs to find potential fund-returning companies.

After meeting with over 250 startups (and having studied the pitch decks of probably over 1000 startups) the past year since I began full-time investing, I developed a heuristic for what to look for in early-stage, pre-revenue, open-source startups. I’ve found that open-source traction tends to precede significant commercial traction—MongoDB, at the time of their S-1 filing, famously had 4300 customers against over 30 million downloads. Therefore, I would need look at “proxy” signals for future success. Some of the signals (non-exhaustive) that I looked for when sourcing include:

Number of GitHub stars to signify overall repository popularity

Number of forks/number of issues as indicators of community involvement

Recent code commit activity to verify the project is still active

Repositories that are at least several months old to have enough time to show early signs of open-source traction

User activity on Slack/Discord for these companies’ public channels to better understand how customers are using the product & any pain points they have

Twitter activity for the startup (number of followers, quality of followers, number of mentions, post engagement)

Hacker News sentiment for a startup’s public product launch

I wanted to codify these signals and turn them into an automated system not only to make my day-to-day more efficient, but also to meet the startups before other competing VCs do. In VC-land, being able to meet a company slightly earlier (in the order of days or weeks) is often enough time for an investor to build conviction in a company/space and win the deal. Because many VCs don’t have in-house data science teams (outside of large funds that have the AUM to sustain these initiatives), I felt that whatever I built would still be useful and wouldn’t be as susceptible to alpha decay compared to systems deployed in the public markets. With that in mind, I dusted off my Jupyter Notebook, put Lewis Capaldi on repeat on Spotify, and began cranking out code.

Data cleaning and exploration

The first part of this mini-series will explore how I went about defining my search space, exploring the data I pulled, and curating the resulting projects of interest that I plan on following up on. For this project I used Python with the usual libraries (pandas, requests, matplotlib, seaborn) and the GitHub API.

To start off, I knew the search space was massive—Runa Capital has a good article explaining how there were over 24,000 repositories over 1000+ stars even as early as Q2 2020. While it’s interesting to look at GitHub repositories with high star counts, I wanted to unearth projects that were just getting off the ground. The challenge for me was narrowing down these repositories to something more tractable.

To do this, I constrained my initial search to the following:

Search repositories that were created 4+ months ago (see the illustration above) to give projects enough time to grow

Limit to an 8-month search window (e.g. beginning of August 2021 to beginning of April 2022). The rationale for this is other VCs with data science teams have probably reached out to projects over 1 year old, so I would need to target “fresh” projects

A minimum/maximum range for repository specific information such as number of stars, number of forks, and number of issues. These metrics are designed to ensure repository popularity and community engagement

A least one Git push over the past month to ensure the project is still alive.

I ran into API rate limits pretty quickly. I got around this by “chunking” my searches into smaller date ranges, saving results into pickle files and retrying the API requests that failed.

Using my search constraints, I was able to generate 2055 repositories, a subset of which is shown.

I then began exploring the data, first by looking at the GitHub star distribution amongst the 2055 repositories. We see here that over 50% of the repositories have <= 518 stars, and that there’s a good contingent of repositories that have over 1000 stars.

After that, I looked to see if there was any correlation between the number of stars vs. number of forks. Which unfortunately didn’t give me too much additional information.

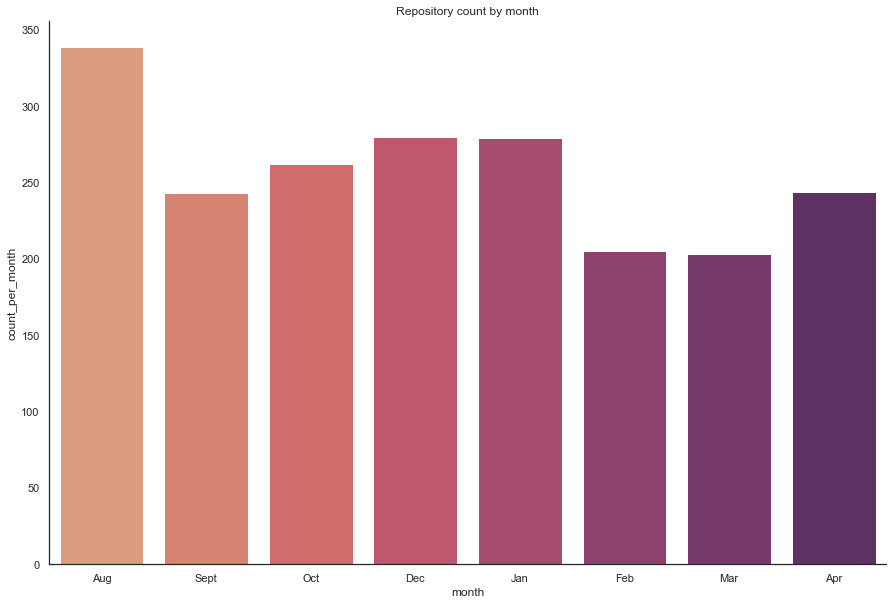

I built the same scatter plots for number of stars vs. number of issues and number of forks vs. number of issues. There wasn’t a ton of clustering effects but I did note a number of outliers (high number of forks and high number of issues) that warranted further investigation. I next graphed the distribution of GitHub stars by month via a box plot, from August to April – the results were a bit surprising because there was actually a good number of projects were started in April 2022 that had a high star count even compared to the repository count in August 2021.

As an example, there were 339 projects from August 2021 that fit my search criteria vs. 244 in April 2022. My working theory was that the star growth for many projects is probably faster than I had anticipated, and that older projects with star counts in my search constraints were actually the slow growers. In future iterations of this project, I would need to implement a sliding scale in terms of my search query (higher star count requirement for older repositories compared vs. lower counts for newer ones). Once we break down the repositories by the month they were created and aggregate their count by month, we see that this is indeed the case.

Finally, to narrow down my search space of organizations I actually plan on reaching out to, I filtered the repositories that were in the top-75 percentile of star count, number of issues, and number of forks. This yielded 101 repositories—a much more tractable number of repositories for me to manually explore. After scanning these repositories, a number of projects already look like promising leads—leads that I probably couldn’t have surfaced otherwise! The next step for me would be to actually reach out to these organizations to see if they fit within my investment scope.

Future work

This was my first stab at using more analytical approaches to surfacing interesting open-source startups, and there are a number of enhancements that I plan on implementing in subsequent versions of the system to generate more accurate sourcing leads. These include:

Set up cron jobs to periodically extract new repositories. I’m thinking about using something like Temporal to more gracefully handle failures

Correlate GitHub data with 3rd party signals like Hacker News’ product launch sentiment, job postings, and Twitter popularity

Build various derived signals (e.g. star growth over time, contributor concentration, contributor quality, etc.) and see if I could run something more advanced like k-means or principle component analysis

Scrape open-source projects that have received significant funding and backtest to see if we can “predict” the success of certain early-stage projects

Build a web-app that aggregates additional signals to give me a bird’s eye view of my sourcing efforts. I’ve been playing with Render recently, which has a generous free tier courtesy of well-heeled investors like Addition and Elad Gil

And that concludes Part 1 of this mini-series! If you’re a founder working on cloud infrastructure or enterprise SaaS, want to chat about the prolific use of flashbacks as plot devices in k-dramas, or want to debate the best off-menu items at Philz Coffee, please don’t hesitate to reach out! I can be reached on Twitter or LinkedIn!

Thank you to Will L., Maged A, and IB for the edits!

Super interesting! I know VC who automated sourcing on LinkedIn using location/school/job as filter. Curious on how you will further cut down noise from the results.