Frontier AI Labs: the Call Option to AGI

Since the launch of ChatGPT less than three years ago, the pace of AI development has only accelerated. Over the past six months, the intensity of research, funding, and product announcements has seemingly gone parabolic. OpenAI's recent $40 billion funding round, DeepSeek’s disruptive R1 model, Google’s formidable Gemini 2.5 Pro, and, hot off the press, Meta investing ~$14B in Scale AI and bringing on its co-founder Alexandr Wang to run Meta's superintelligence lab all highlight this relentless pace. At the foundation model layer, startups, investors, and tech incumbents are clearly going all in.

The critical questions now are: what’s the endgame for this layer of the stack, and where will value accrue? Will it be the Goliaths like Google, who already own the entire technology stack and have existing distribution, or will it be the Davids (frontier AI labs like OpenAI and Anthropic)? In this post, I argue that for AI labs to justify their valuations (OpenAI at $300B, Anthropic at $61.5B, and xAI selling secondaries at a $113B valuation), they must execute a multi-pronged strategy: capture advertising revenue from incumbents, build a defensible enterprise business, and invest in moonshot endeavors.

Here’s what I cover:

The current state of VC investing at the foundation model layer, and why large fund sizes and frontier AI labs are a match made in heaven

A general framework for evaluating the revenue drivers for frontier AI labs, from consumer, to API, to service revenues. I present this as a war waged on three fronts

Front #1 (Consumer): I highlight that while paid subscriptions for apps like ChatGPT currently drive the bulk of revenue, frontier AI labs will ultimately need to monetize their free users and take incumbents’ market share

Front #2 (API): On the enterprise side of things, API revenue growth will plateau in the medium term

Front #3 (Service Revenues): therefore, AI labs have to move to the application layer and capture service revenues. This has broad ramifications for the rest of the tech ecosystem

The fate of the “rest” (xAI, Mistral, Cohere, Sakana, etc.)

Frontier AI labs are also a “call option” for further asymmetric upside as AI fundamentally changes consumer behavior and automates broad swathes of human labor

Let’s dive in.

The current state of investing at the foundation model layer is one of extreme capital intensity, with VCs and strategic investors (e.g. Google, Amazon, Microsoft, Salesforce) collectively ripping tens of billions of dollars into foundation model startups / frontier AI labs (I’ll use these two terms interchangeably). They do so knowing full well that failure means incinerating billions in capital. Companies have already started dropping out of the race, either pivoting away from training frontier models altogether (Cohere) or exiting via acqui-hires (Adept, Inflection). The interesting dynamic here is the strategy VCs are taking at this layer: either investing in multiple companies or going all in on one.

Despite these risks, frontier AI labs are perfect investment targets for VCs with massive AUMs, because these funds can plow billions into these startups and still have a reasonable shot at generating venture-scale returns. For the funds, this means tens of millions of dollars in management fees per year and potentially billions in carry! But as I wrote in my prior piece, investing at the foundation model layer is a game reserved for a very small number of funds. Smaller funds (e.g. a “classic” $500M Series A firm) are simply priced out. Furthermore, investing at this layer is exceptionally dangerous, because it assumes that frontier AI labs can perfectly execute both their consumer and enterprise lines of business.

Understanding Value Creation at the Foundation Model Layer

My general framework for “justifying” frothy valuations for foundation model players like OpenAI, Anthropic, and xAI is to break down their revenue drivers, of which there are four:

Consumer: apps like ChatGPT, Claude, and Gemini generally have three “tiers” of plans: a free tier (potentially supported by ads), a paid tier (e.g. $20/user/month), and a pro tier that ranges from $200 to $250/user/month

API revenues: charged per call based on the number of input and output tokens. Right now, vendors have some pricing power on high-end models, but we’re already seeing significant pricing pressure on low- and mid-tier models

Enterprise applications / human labor replacement: labs build or buy their own horizontal applications. OpenAI is an early mover in this direction

“Moonshots”: still in their early days, but frontier AI labs have shown a willingness to enter the world of atoms, whether it’s hardware, humanoid robotics, or consumer devices

In the next few sections, I will highlight the wars currently being waged on three fronts: Consumer, API, and Service Revenues. The longer-horizon moonshot initiatives, meanwhile, promise to fundamentally change the fabric of society and how humans interact with technology.

Front #1: the Consumer Battle for Attention (and Ad Dollars), and the Quest to Disrupt Incumbents

General purpose consumer apps (e.g. ChatGPT / Anthropic Claude / Gemini) primarily derive their revenues from two sources—advertising and paid subscriptions. While paid subscriptions currently drive most of consumer revenues, there’s a natural ceiling to the number of users willing to pay for something like ChatGPT. In the longer term, I expect the bulk of revenues to be derived from the integration of advertising into consumer generative AI experiences.

To get a sense of WHY there’s a limit to the revenue potential of paid consumer subscriptions, I run a sensitivity analysis using the following assumptions:

5% of all users of apps like ChatGPT are on a paid rather than a free tier. This is an optimistic assumption, as OpenAI had ~20M paying users when its total user base was ~500M (a monetization rate of ~4%)

Of these paying users, 95% pay for the basic tier (e.g. ChatGPT Plus, currently at $20/month)

The remaining 5% of paying users pay for the professional tier

The “pro” tier costs ~10x more than the base paid tier (ChatGPT Pro costs $200/user/month and Gemini Ultra costs $250/user/month)

As a note:

The global population is ~8.2B, but Western products will likely not be able to enter countries like China and Russia, effectively taking ~1.5B users off the market (although Chinese users have ways of accessing US AI services via VPNs and resellers)

ChatGPT already has ~1B users, while Google’s Gemini has ~400M

The sensitivity analysis below estimates total global revenues from paid consumer users, with the sensitized parameters being i) the monthly subscription cost of the entry-level paid consumer tier (e.g. a $20 base tier corresponds to a $200 pro tier) and ii) global consumer generative AI penetration (ranging from 2.5B to 5B users).
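The arithmetic behind such a table is simple enough to sketch in a few lines of Python. This is a minimal reconstruction under the assumptions listed above (5% of users paying, a 95/5 basic/pro split, pro priced at 10x basic, and a 10x revenue multiple); the specific user counts and price points in the grid are illustrative choices, not necessarily the exact rows and columns of the original table.

```python
"""Rough sketch of the paid consumer subscription sensitivity analysis.

Parameters mirror the assumptions in the post; the grid values below are
illustrative, not the author's exact table.
"""

PAID_SHARE = 0.05    # share of all users on any paid tier
BASIC_SHARE = 0.95   # share of paying users on the basic tier
PRO_SHARE = 0.05     # share of paying users on the pro tier
PRO_MULTIPLE = 10    # pro tier priced at ~10x the basic tier
EV_MULTIPLE = 10     # revenue multiple used to translate revenue into enterprise value


def annual_subscription_revenue(users: float, basic_price: float) -> float:
    """Annual paid-consumer revenue (USD) for a user base and basic monthly price."""
    paying_users = users * PAID_SHARE
    blended_monthly_price = BASIC_SHARE * basic_price + PRO_SHARE * basic_price * PRO_MULTIPLE
    return paying_users * blended_monthly_price * 12


def sensitivity_table(user_counts, basic_prices):
    """Map (users, basic_price) -> annual revenue for every combination."""
    return {
        (users, price): annual_subscription_revenue(users, price)
        for users in user_counts
        for price in basic_prices
    }


if __name__ == "__main__":
    users_grid = [2.5e9, 3.75e9, 5e9]  # global consumer gen-AI users (illustrative)
    price_grid = [20, 30, 40]          # basic tier in $/month; pro tier is 10x
    for (users, price), revenue in sensitivity_table(users_grid, price_grid).items():
        print(f"{users / 1e9:.2f}B users @ ${price}/mo: "
              f"${revenue / 1e9:.1f}B/yr revenue, ~${revenue * EV_MULTIPLE / 1e12:.2f}T EV")
```

Note that the blended monthly price per paying user works out to 0.95 × basic + 0.05 × 10 × basic, i.e. 1.45x the basic price, so the small pro cohort still adds meaningfully to revenue.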

With aggressive generative AI penetration and consumer app price increases, this results in something like ~$1T in net new enterprise value creation, assuming a 10x revenue multiple (the midpoint, highlighted in red). The private market marks for the top AI labs already add up to almost $500B, so there’s not a ton of growth left from a venture perspective. Investors, therefore, are clearly pricing in AI labs’ ability to monetize free users. We’re seeing early evidence of this: OpenAI recently teased its upcoming shopping experience in ChatGPT and is rumored to be working on a social networking product. xAI, having merged with X, is poised to be another credible player if it can come up with interesting product directions.

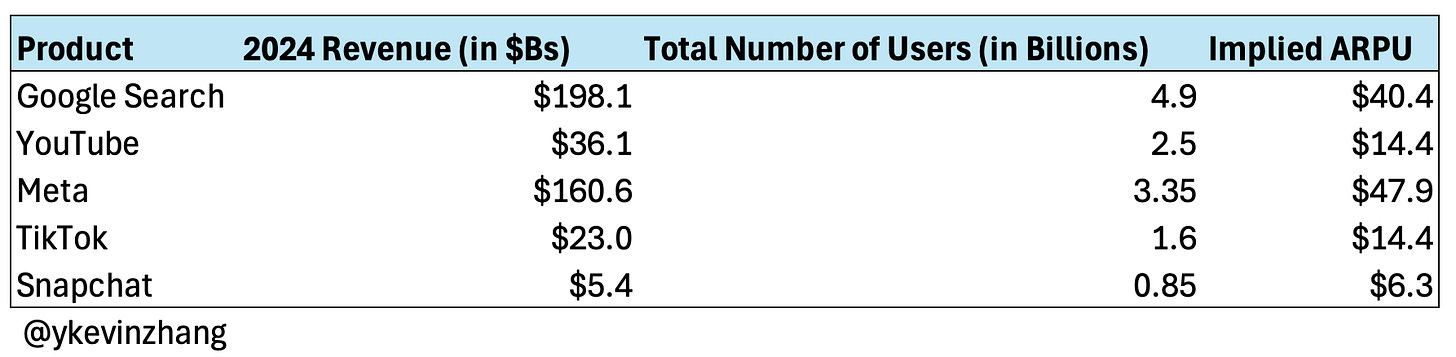

This strategy puts them in direct competition with incumbents like Google and Meta, who generate massive revenues from their existing search and advertising businesses (see above). The incumbents aren't sitting idle: Google has aggressively rolled out Gemini in recent months, while Meta's standalone AI app has climbed rapidly in app store rankings. What’s working against startups is that the incumbents have existing cash cows and can absorb the billions required to serve free users, while the list of startups that can raise this scale of capital is shrinking.

To get a sense of this cost, we can look at the tokens processed by incumbents Google and Microsoft. This past April, Google processed 480T tokens, ~50x year-over-year (YoY) growth. Microsoft, meanwhile, processed ~100T tokens in Q1 2025, up 5x YoY.

We can use Google’s cost to serve Gemini as an example, with the following assumptions:

I used several popular models, listed below

The ratio of input to output tokens is 3:1 (following the methodology used by Artificial Analysis to conduct its benchmarks)

An 80% volume discount off list price is applied for both input and output tokens (actual cost to serve varies across vendors)

Token generation prices are accurate as of early June 2025
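The cost-to-serve arithmetic can be sketched the same way. This is a rough sketch assuming the 3:1 input/output ratio and 80% discount above. The $1.25 and $10 per million token prices in the example are illustrative assumptions (roughly Gemini 2.5 Pro's published rates for shorter prompts around mid-2025; check current pricing), and pricing all of Google's volume at a single model's rates is a simplification.

```python
"""Back-of-the-envelope monthly cost to serve a given token volume.

Assumptions from the post: 3:1 input-to-output token ratio and an 80% discount
off list price. The list prices in the example are illustrative placeholders.
"""


def monthly_serving_cost(
    total_tokens: float,
    input_price_per_m: float,
    output_price_per_m: float,
    input_to_output_ratio: float = 3.0,
    discount: float = 0.80,
) -> float:
    """Estimated cost (USD) to serve `total_tokens`, with prices quoted per 1M tokens."""
    input_share = input_to_output_ratio / (input_to_output_ratio + 1)
    input_tokens = total_tokens * input_share
    output_tokens = total_tokens - input_tokens
    list_cost = (input_tokens / 1e6) * input_price_per_m + (output_tokens / 1e6) * output_price_per_m
    return list_cost * (1 - discount)


if __name__ == "__main__":
    google_monthly_tokens = 480e12  # ~480T tokens processed in April 2025
    # Illustrative list prices in USD per 1M tokens (assumed; verify against vendor pricing).
    cost = monthly_serving_cost(google_monthly_tokens, input_price_per_m=1.25, output_price_per_m=10.0)
    print(f"~${cost / 1e6:,.0f}M per month, ~${cost * 12 / 1e9:.1f}B per year")
```

Under these assumptions, 480T tokens a month comes to roughly $330M a month, or about $4B a year, before any of the capex behind the hardware.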

As we can see here, the only startups that can “eat” these costs at this point are OpenAI, Anthropic, and xAI. Another interesting, albeit unrelated, takeaway is Gemini 2.5 Pro’s pricing: Google’s frontier model is significantly cheaper than equivalent models from OpenAI and Anthropic (until literally this week, when OpenAI dropped its o3 pricing by 80%!). This is primarily due to Google’s decade-long investment in TPUs.

My open questions on consumer revenues are:

How much revenue can AI labs capture from incumbents, and is there enough human attention to go around for both incumbents’ and challengers’ products?

How will generative engine optimization (GEO) affect foundation model vendors' monetization potential? If GEO allows advertisers to target users more precisely, resulting in higher conversions, what does ARPU look like?

While it’s unclear whether there’s significant headroom for ARPU growth, consumers paying for AI tools does imply that there’s a shift in behavior and an increasing willingness to pay for software. Frontier labs and incumbents alike will likely try to extract this “additional” value by charging advertisers more, thereby driving up ARPU.

Front #2: APIs are currently the dominant source of enterprise revenue, but it’s a capped market

Moving on to API revenues: the API business is currently the dominant enterprise monetization motion for companies like OpenAI and Anthropic. Looking at the two biggest startups in the space, OpenAI is projected to generate ~$12.7B in revenue in 2025, up 3x from the prior year, and is expected to grow ~2.3x again to ~$29B in 2026. Anthropic, meanwhile, has tripled its annualized run rate since December 2024, hitting $3B by the end of May. The interesting dynamic here is that most of OpenAI’s revenues come from ChatGPT, while most of Anthropic’s revenues come from its Claude APIs. Both companies, however, are seeing significant growth from their API businesses. But how big can API revenues get?

There are three forces at play here:

Software becoming more AI native, resulting in more AI API usage

A shift to reasoning models means that more tokens need to be generated per API call

Inference costs are falling at ~10x YoY for the same capability

The framework I use is to model two “extreme” outcomes:

On one extreme, we might see an explosion in the use of AI agents running reasoning models on high-end workloads. In this world, tokens generated might grow something like 10-100x YoY (similar to Google’s 50x YoY growth). At the top of that range, API revenues might grow 10x YoY even if inference costs are falling 10x.

On the other extreme, we see moderate growth (e.g. closer to 10x YoY growth in tokens generated). In this scenario, global LLM API revenues will actually be flat because of falling inference costs!
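Both scenarios reduce to one ratio: if API prices track inference costs, revenue grows by token growth divided by the cost decline. A minimal sketch under that pass-through assumption (the function names are hypothetical, and revenue is expressed as an index starting at 1.0 rather than in dollars):

```python
"""Sketch of the two API revenue scenarios.

Assumes per-token prices fall in step with inference costs, so revenue growth
is roughly token-volume growth divided by the yearly cost decline.
"""


def api_revenue_multiple(token_growth: float, cost_decline: float) -> float:
    """Year-over-year revenue multiple under the price-tracks-cost assumption."""
    return token_growth / cost_decline


def project_revenue(base: float, token_growth: float, cost_decline: float, years: int) -> float:
    """Compound the yearly revenue multiple over several years from a base revenue index."""
    return base * api_revenue_multiple(token_growth, cost_decline) ** years


if __name__ == "__main__":
    scenarios = {
        "agentic explosion (100x tokens/yr)": 100,
        "moderate growth (10x tokens/yr)": 10,
    }
    cost_decline = 10  # inference costs falling ~10x YoY for the same capability
    for name, token_growth in scenarios.items():
        multiple = api_revenue_multiple(token_growth, cost_decline)
        index_in_3y = project_revenue(1.0, token_growth, cost_decline, years=3)
        print(f"{name}: {multiple:.0f}x revenue per year, index after 3 years = {index_in_3y:,.0f}")
```

Compounding makes the gap stark: three years of the first scenario yields a 1,000x revenue index, while the second stays at 1x.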

The way I think the market will play out is that global AI demand will continue to see rapid growth in the short to medium term, driven by the first two forces highlighted above. But over time, even with the extensive adoption of AI agents, a growing set of use cases will be served by more cost-efficient, non-reasoning models. Already, use cases like summarization and translation can be handled by existing non-frontier models. Therefore, global spend on model API calls will likely be capped at tens of billions of dollars annually, spread across multiple model companies and inference providers running open-source models (which I wrote about last year). This is not nearly enough to support trillion-dollar outcomes.

Front #3: enterprise success depends on replacing human labor

So how do frontier AI labs capture additional revenues? Outside of monetizing free users at scale via advertising, the most obvious angle is services revenues. This is the main reason why VCs and founders are so excited about AI agents: the promise of AI agents is that they can automate human labor. In the US alone, there’s ~$4T in service wages that will be impacted by AI. In the broader Western world, excluding the US, there’s at least another $4T in service wages (the EU alone gets $14T of its roughly $19T GDP from service industries, though it’s unclear what percent of this will be impacted by AI). This means there are at least hundreds of billions of dollars in net new revenues to be captured, as estimated via the following assumptions:

$8T in service wages in the Western world that will be impacted by AI

20% of jobs currently done by humans will be eliminated by AI in the medium term

AI services can capture 10-30% of the ROI of replacing human labor

This results in ~$320B in net new revenues from replacing human labor (assuming 20% of the ROI is monetized). But that’s not all! There’s $390B in existing SaaS revenues that stand to be disrupted, especially if AI agents replace per-seat pricing models (the question here is how much of these revenues can be defended by existing system of record behemoths like Salesforce or ServiceNow). This doesn’t even include “jobs to be done” that weren’t economical before the age of AI. One trivial example is an SMB using AI to parse through legal databases without needing to pay for expensive paralegals (this “job” may simply not have been done pre-AI). All told, this represents up to ~$7T in enterprise value to be reallocated to the winners (assuming a 10x revenue multiple), including over $3T from services revenues.
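A short sketch of this estimate, reading “ROI” as the wage savings from the eliminated 20% of work (my interpretation of the assumptions above). It prints the services revenue across the 10-30% capture range and the combined services-plus-SaaS enterprise value only for the 20% base case used in the text; the constant and function names are hypothetical.

```python
"""Sketch of the labor-replacement revenue estimate.

Interprets 'ROI' as the wage savings from automating 20% of Western service work;
AI vendors then capture a fraction of those savings as revenue.
"""

WESTERN_SERVICE_WAGES = 8e12  # ~$8T in Western service wages impacted by AI
AUTOMATED_SHARE = 0.20        # 20% of that human work eliminated in the medium term
BASE_CAPTURE_RATE = 0.20      # base case: vendors monetize 20% of the savings
SAAS_REVENUE = 390e9          # existing SaaS revenue that stands to be disrupted
EV_MULTIPLE = 10              # revenue multiple used throughout the post


def labor_replacement_revenue(wages: float, automated_share: float, capture_rate: float) -> float:
    """Annual revenue (USD) AI vendors capture as a share of the wage savings."""
    savings = wages * automated_share
    return savings * capture_rate


if __name__ == "__main__":
    for capture_rate in (0.10, 0.20, 0.30):
        services = labor_replacement_revenue(WESTERN_SERVICE_WAGES, AUTOMATED_SHARE, capture_rate)
        print(f"capture {capture_rate:.0%}: services revenue ${services / 1e9:,.0f}B/yr")

    base_services = labor_replacement_revenue(WESTERN_SERVICE_WAGES, AUTOMATED_SHARE, BASE_CAPTURE_RATE)
    total_ev = (base_services + SAAS_REVENUE) * EV_MULTIPLE
    print(f"base case: services EV ~${base_services * EV_MULTIPLE / 1e12:.1f}T, "
          f"services + SaaS EV ~${total_ev / 1e12:.1f}T")
```

The base case reproduces the ~$320B in services revenue and roughly $7T of combined enterprise value at a 10x multiple.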

Given this dynamic, I expect frontier labs to be increasingly aggressive at the application layer and to compete with their downstream customers. We’re already seeing this play out with OpenAI acquiring Windsurf to directly enter the AI code generation market. Another example is the launch of ChatGPT’s meeting recording and summarization feature, which puts OpenAI in direct competition with a number of AI startups. Going forward, frontier labs will likely prioritize first-party applications and become hostile towards perceived competitors (e.g. Anthropic cutting off Windsurf's access to its models after news of the OpenAI acquisition). This is probably what Sam Altman meant when he talked about steamrolling AI companies! I think most horizontal AI companies fall within this “blast radius” (e.g. vanilla text, code, video, image, and audio generation).

What will be very interesting is how large these AI application companies can get. The current dynamic is that vertically integrated incumbents (e.g. Google, Microsoft, and Amazon, which compete from the infrastructure layer up to the application layer) are ~10x bigger than the largest SaaS companies (e.g. Salesforce and ServiceNow). Bret Taylor (Sierra co-founder, former co-CEO of Salesforce) seems to think that in the Age of AI, application makers can capture more of the value and potentially mint the first trillion-dollar application company. I’m inclined to take the other side of the bet: I don’t think frontier labs will sit idly by and become dumb AI “pipes”.

The fate of the rest (Mistral, xAI, Cohere, etc.)

What happens to all the other frontier AI labs? Here, I like to use the concept of “escape velocity”. In SaaS, escape velocity means that once a company has reached a certain revenue scale, it becomes extremely difficult for a new SaaS product to catch up. It’s unclear what this escape velocity is for foundation models — is it when multiple companies, like Anthropic and OpenAI, have reached billions or over ten billion in revenue? Or, is it an insurmountable funding lead? The search engine wars serve as another case study, with a single winner (Google) taking most of the market, and leaving everyone else (Yahoo, AltaVista, Lycos, Excite, Ask Jeeves, etc.) in the dust.

I think these players will become national or continental champions, in the case of Cohere in Canada, Mistral in Europe, and Sakana in Japan. xAI is the only credible competitor left, assuming Elon can continue to fundraise. SSI and Thinking Machines, meanwhile, represent additional “exposure” for VCs who might balk at OpenAI / Anthropic’s valuations or want to diversify risk. Though, in SSI’s case, Ilya may have found a different mountain to climb.

Hardware, Consumer Devices, Robotics, and AGI

The most exciting aspect of these frontier AI labs is their "call option" to additional asymmetric upside. These companies are innovating at an unprecedented pace. OpenAI's recent moves are particularly telling: exploring custom semiconductor development, acquiring io, Jony Ive's hardware startup, to build consumer devices, its massive data center build-outs, and rebuilding its robotics team. xAI, meanwhile, famously constructed a 100,000 GPU data center cluster in 122 days, and its Grok models are already performing near state of the art. While Anthropic hasn't made such splashy moves yet (beyond things like Project Rainier), I expect it to expand beyond its predominantly API business, or risk getting left behind.

This strategy makes sense. Frontier AI labs are incentivized to capture value at every layer of the stack, from the model layer (where they can optimize cost and performance with custom hardware), to the application layer, to consumer devices, to robotics. This means that the potential outcomes for these labs might even be larger than today's $2-3T incumbents! And if AGI / ASI (artificial superintelligence) were achieved, the services and labor revenues that frontier labs could extract could be significantly larger than my estimates above.

That being said, incumbents like Google are structurally positioned to win given their existing tech and distribution, which already reach billions of people. Private market investors, however, are taking the other side of the bet as their David looks to topple incumbent tech Goliaths. Should any of these frontier labs succeed, then investors would finally get their 10-100x returns on tens of billions of dollars deployed.

Huge thanks to Will Lee, John Wu, Ying Chang, Yash Tulsani, Lisa Zhou, Maged Ahmed, Wai Wu, and Andrew Tan for the feedback on this article. If you want to chat about all things investing, ML/AI, and geopolitics, I’m around on LinkedIn and Twitter!